The First 70B Thai LLM.

OpenThaiGPT is a volunteer group that has created an open-source Thai language model of the same name, OpenThaiGPT (OTG).

They continued training an existing open-source large language model (LLM), and the resulting model scores nearly as high as Claude Sonnet on certain benchmarks.

Launch date

On April 8, 2024, they released an open-source (Apache-2.0) large language model (LLM) for the Thai language in 7-billion, 13-billion, and 70-billion-parameter versions, all based on Llama-2.

The model has been fine-tuned for several use cases such as instruction following, chatbots, and Retrieval-Augmented Generation (RAG).

Highlight

Performance

The main highlight is their claim that OpenThaiGPT-70b (OTG-70b) scores nearly as high as Claude Sonnet on a Thai benchmark.

Float16.cloud has also reproduced the benchmark using M3Exam and compared the results with OpenAI models. On the Thai portion, OTG-70b scores between GPT-3.5 and GPT-4.

| Model name | English (M3Exam) | Thai (M3Exam) |
|---|---|---|
| OTG-7b | 40.92 % | 25.14 % |
| OTG-13b | 53.69 % | 36.49 % |
| OTG-70b | 72.58 % | 48.29 % |
| GPT-3.5-turbo-0613* | - | 34.1 % |
| GPT-4-0613* | - | 56.0 % |

*GPT scores are taken from the Typhoon-7b report.
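The sketch below restates the Thai column of the table as a small lookup and reports where each OTG model falls relative to the two GPT baselines. It only re-reads the published numbers and is not an evaluation harness; the `thai_scores` dictionary and the `position` helper are illustrative names.

```python
# Thai M3Exam accuracy (%) copied from the table above.
# The GPT figures are the ones cited from the Typhoon-7b report.
thai_scores = {
    "OTG-7b": 25.14,
    "OTG-13b": 36.49,
    "OTG-70b": 48.29,
    "GPT-3.5-turbo-0613": 34.1,
    "GPT-4-0613": 56.0,
}


def position(model: str) -> str:
    """Describe where a model's Thai score falls relative to GPT-3.5 and GPT-4."""
    score = thai_scores[model]
    low = thai_scores["GPT-3.5-turbo-0613"]
    high = thai_scores["GPT-4-0613"]
    if score < low:
        return "below GPT-3.5"
    if score > high:
        return "above GPT-4"
    return "between GPT-3.5 and GPT-4"


for name in ("OTG-7b", "OTG-13b", "OTG-70b"):
    print(f"{name}: {position(name)}")
```

Note that by these numbers OTG-13b also lands between the two GPT baselines on Thai, while OTG-7b falls below GPT-3.5.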

Tokenizer

They added 10,000 Thai words to the model's vocabulary. As a result, the model is significantly faster than OpenAI's models on Thai text and uses approximately one-third as many tokens as OpenAI's models for the same text.
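As a rough worked example, assume the ~1/3 ratio holds uniformly (in practice it varies with the text). A Thai reply that takes 900 tokens with an OpenAI tokenizer would then need about 300 OTG tokens. Because autoregressive generation emits one token per decoding step, that is also roughly 600 fewer steps, which is one plausible source of the speed-up. The 900-token figure and the helper names are hypothetical.

```python
# Assumption: the ~1/3 token ratio quoted above applies uniformly.
# Real ratios depend on the text being tokenized.
TOKEN_RATIO = 1 / 3


def estimate_otg_tokens(openai_tokens: int, ratio: float = TOKEN_RATIO) -> int:
    """Estimate how many OTG tokens the same Thai text would need."""
    return round(openai_tokens * ratio)


def decoding_steps_saved(openai_tokens: int, ratio: float = TOKEN_RATIO) -> int:
    """Generation emits one token per step, so fewer tokens means fewer steps."""
    return openai_tokens - estimate_otg_tokens(openai_tokens, ratio)


# Hypothetical example: a Thai reply that takes 900 OpenAI tokens.
print(estimate_otg_tokens(900))   # 300
print(decoding_steps_saved(900))  # 600
```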

You can try the tokenizer yourself with the Float16 tokenizer tool.

About OpenThaiGPT

OpenThaiGPT has received sponsorship from several government agencies, private companies, and research institutions.

The datasets

The datasets include both private and public sources. In total, the model was trained on 65 billion Thai tokens and then fine-tuned on over 1 million Thai instruction examples.

Infrastructure

The training infrastructure is LanTa, a supercomputer in Thailand ranked 94th on the Top500 list, with 176 nodes and a total of 704 Nvidia A100 GPUs (4 per node).
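The per-node figure follows directly from the two totals: 704 GPUs spread over 176 nodes is 4 GPUs per node. A minimal check of that arithmetic, using only the numbers stated above:

```python
# Figures stated above for the LanTa supercomputer.
nodes = 176
total_gpus = 704

# Even split of GPUs across nodes.
gpus_per_node, remainder = divmod(total_gpus, nodes)
print(gpus_per_node, remainder)  # 4 0
```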

Hugging Face model card

Interested?

Float16 is an AI inference platform that aims to support AI models for the Asian market.

We have developed advanced optimization techniques for non-English large language models (LLMs) that let us offer prices on par with world-leading providers such as Together.ai.

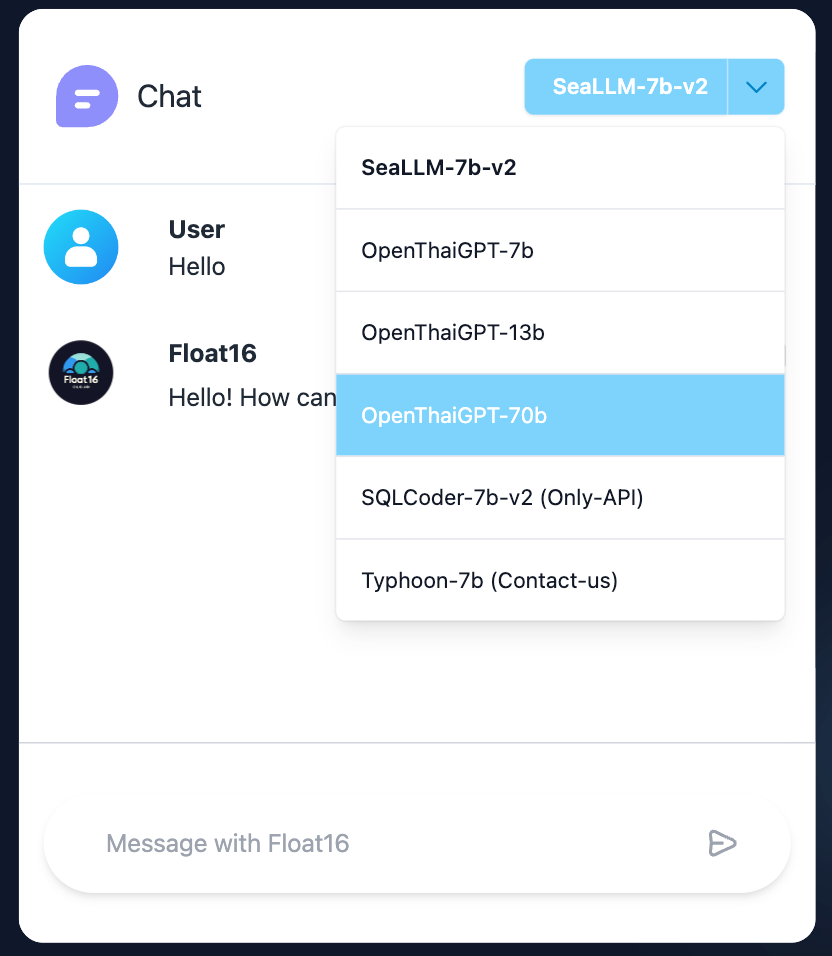

Try it in chat

Float16.cloud has supported the OTG series since day one.

You can try the OTG series models on the chat interface at float16.cloud.

Developer resources

| Model name | Input tokens* | Output tokens* |
|---|---|---|
| openthaigpt-7b | $0.2 | $0.2 |
| openthaigpt-13b | $0.3 | $0.3 |
| openthaigpt-70b | $0.9 | $0.9 |

*Price per 1 million tokens.
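To estimate what a single request costs, multiply each token count by its per-million price and divide by 1,000,000. The sketch below copies the prices from the table; `request_cost` and the 2,000-input / 500-output token request are hypothetical, and actual billing may involve details not covered here.

```python
# Price per 1 million tokens (input, output), copied from the table above.
PRICES_PER_MILLION = {
    "openthaigpt-7b": (0.2, 0.2),
    "openthaigpt-13b": (0.3, 0.3),
    "openthaigpt-70b": (0.9, 0.9),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated cost of one request in dollars."""
    in_price, out_price = PRICES_PER_MILLION[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


# Hypothetical request: 2,000 input tokens and 500 output tokens on the 70b model.
cost = request_cost("openthaigpt-70b", 2_000, 500)
print(f"${cost:.6f}")  # $0.002250
```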